Welcome to our research project, "Construction of E-Motion Unit AI," in which we are developing an innovative technology to read human emotions from body motions. Nonverbal information plays an important role in everyday interpersonal communication. Among nonverbal cues, posture and body movements have received less attention than facial expressions, largely because objective, general-purpose units of analysis, such as those frequently used in research on facial expressions, have not been established for them.

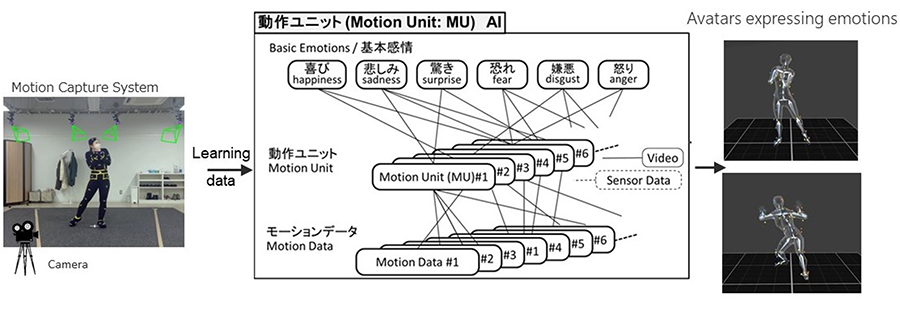

In this study, we define a "Motion Unit" as a unit of analysis for body movements that express emotion. In contrast to the traditional theory-driven approach, we adopted a data-driven approach using large-scale motion capture data. Our approach has two stages. In the first stage, we extract the specific movements required to express different emotions and identify the units of analysis for these movements, which allows us to understand the physical actions needed for different emotional expressions. In the second stage, we will construct a body movement generator that manipulates these Motion Units, similar to a facial expression generator in facial expression research. By verifying which combinations of movements constitute different emotional expressions, we aim to advance our understanding of nonverbal communication.

Furthermore, “E-Motion Unit AI” could provide a novel technology for smooth, humanized telecommunication in the future. For example, emotions can be estimated from a person's body movements and other sensor information, enabling us to encode and transmit movements that can be decoded and extracted at a remote location and applied to an avatar. This method would allow avatars to perform emotionally rich actions without overburdening the communication channel and with robustness to changes in the communication environment, thereby realizing rich remote communication.

Theme

Construction of “Motion Unit AI” to read emotions from human body motions.

Main Research Results and Publications

- Yoshifumi Kitamura, Ken Fujiwara, Taku Komura: Emotion Estimation from Human Body Movements and Generation of Emotionally Rich Movements of Characters – Toward the Realization of Humanity-rich Telecommunication, Sensait, January 2023. (in Japanese)

- Ken Fujiwara, Miao Cheng, Chia-huei Tseng, Yoshifumi Kitamura: AI Understands Human Emotions and Gets Close to People: Reading Emotions from Bodily Movements: The Development of the Motion Unit AI, Information Processing Society of Japan Magazine, Vol. 64, No. 2, February 2023. (in Japanese)

Results in Japanese are described in Japanese.