High-Performance Physically-based Rendering

Interactive applications such as virtual reality and games rely on real-time rendering, which sacrifices image quality in order to generate dozens of images per second. Films and similar applications instead use offline (pre-)rendering. A large number of photorealistic, high-quality images are generated in advance, often taking hours or even days per image. Each image is computed from a high-resolution shape model of the entire environment, with complex optical phenomena (reflection, scattering, refraction, absorption and so on) evaluated strictly according to the rendering equation. The images are then played back in sequence as an animation. As GPU performance improves, the range of techniques available to real-time rendering will grow and image quality will improve to some extent. Even so, real-time rendering remains far from the quality that offline rendering achieves. In particular, no efficient method has yet been established for two problems. The first is physically based rendering that strictly accounts for complex optical phenomena such as indirect light and caustics. The second is fluid simulation, in which the shape of a fluid changes freely in space. This gap holds back further improvements in real-time rendering quality.
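For reference, the rendering equation mentioned above is usually written in the following standard hemispherical form. This is the textbook formulation, not a formula taken from our paper:

$$
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o) + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\mathbf{n} \cdot \omega_i)\, \mathrm{d}\omega_i
$$

The symbols are as follows:

- $L_o$ is the radiance leaving point $\mathbf{x}$ in direction $\omega_o$.
- $L_e$ is the emitted radiance.
- $f_r$ is the BRDF.
- $L_i$ is the radiance arriving from direction $\omega_i$.
- $\mathbf{n}$ is the surface normal.
- $\Omega$ is the hemisphere above $\mathbf{x}$.

The incoming radiance $L_i$ at one point is itself the outgoing radiance of some other surface, so the equation is recursive. This recursion is why indirect light is expensive to compute.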

Our research aims to establish a physically based rendering method that efficiently generates realistic, high-quality images and video while strictly accounting for complex optical phenomena.

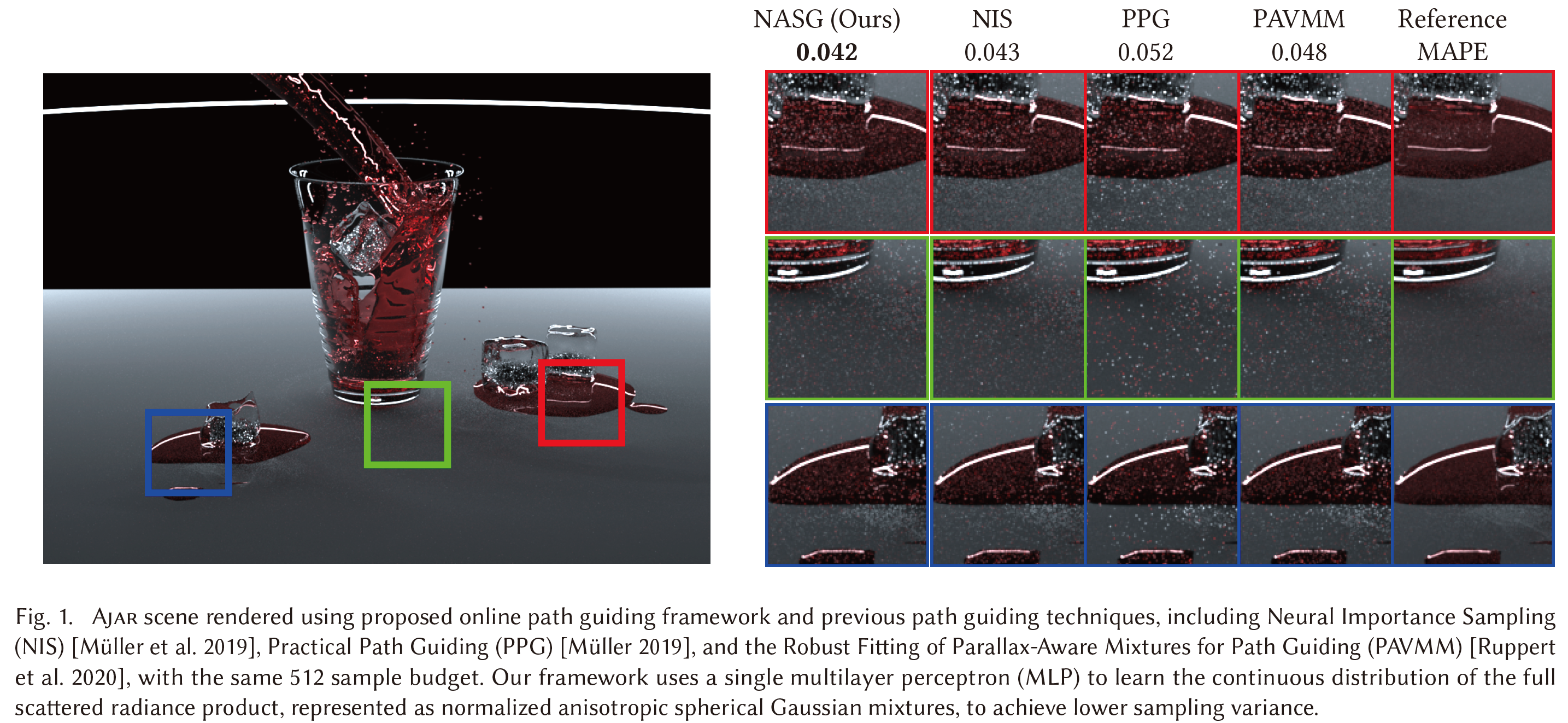

Producing realistic, high-quality images of indoor environments and similar scenes requires physically based rendering that strictly accounts for complex optical phenomena. Examples include the colour bleeding and caustics caused by indirect light in a room. In physically based rendering, an image is generated by solving the rendering equation, which describes how light energy propagates along the paths that light takes. The Monte Carlo method, which evaluates integrals by random sampling without discretisation, has become the standard way of solving it. However, it is computationally inefficient, because a large number of samples is needed before the solution converges. Importance sampling improves efficiency by drawing samples according to a chosen probability density function. Examples include explicitly sampling light sources and the camera, and favouring directions with a high probability of strong reflection or refraction so that high-energy paths are sampled more often. A technique called path guiding has also been proposed to reduce the number of samples. It uses a pre-estimated radiance distribution to construct efficient, low-variance paths. Even so, errors tend to remain large in some scenes, such as a dimly lit room illuminated mainly by indirect light arriving along many complex paths. In such scenes, the renderer cannot gather enough samples to estimate that distribution accurately in the first place.
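The following toy sketch shows why importance sampling helps. It is not code from our renderer. A Monte Carlo estimator averages f(ω)/p(ω) over directions ω drawn with density p. The closer p is to being proportional to f, the lower the variance. The toy integrand is cos θ over the hemisphere, whose exact integral is π. The function names `estimates_uniform`, `estimates_cosine` and `summarize` are hypothetical.

```python
import math
import random
import statistics

# Toy integrand: cos(theta) over the unit hemisphere; the exact integral is pi.

def estimates_uniform(n, rng):
    """Per-sample estimates f/p with directions drawn uniformly on the hemisphere."""
    pdf = 1.0 / (2.0 * math.pi)            # uniform hemisphere density
    samples = []
    for _ in range(n):
        cos_theta = rng.random()           # uniform hemisphere => cos(theta) ~ U[0, 1)
        samples.append(cos_theta / pdf)
    return samples

def estimates_cosine(n, rng):
    """Per-sample estimates f/p with cosine-weighted sampling, p = cos(theta) / pi."""
    samples = []
    for _ in range(n):
        cos_theta = math.sqrt(1.0 - rng.random())  # in (0, 1], so p is never zero
        pdf = cos_theta / math.pi
        samples.append(cos_theta / pdf)
    return samples

def summarize(samples):
    """Return the Monte Carlo estimate (mean) and the per-sample variance."""
    return statistics.fmean(samples), statistics.pvariance(samples)

if __name__ == "__main__":
    rng = random.Random(1)
    for name, fn in [("uniform", estimates_uniform), ("cosine", estimates_cosine)]:
        mean, var = summarize(fn(10_000, rng))
        print(f"{name:8s} mean={mean:.4f} variance={var:.4f}")
```

Uniform sampling gives a mean close to π, with a per-sample variance of about π²/3. Cosine-weighted sampling has a density exactly proportional to this integrand, so it returns π for every sample (zero variance up to rounding). Real integrands also contain the BRDF and the unknown incoming radiance. Path guiding aims to make p follow that radiance as well.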

To address this problem, we proposed a new online-learning path guiding method that uses a small neural network to learn the radiance distribution in 3D space efficiently during a single rendering task. To improve learning accuracy and reduce the computational load, we also proposed a new, highly expressive spherical distribution, Normalised Anisotropic Spherical Gaussians (NASG), as the basic sampling distribution. Together, these allow images of the same quality to be obtained with as little as one-tenth of the samples that conventional methods require. This improves efficiency by up to several tens of times. Our method also runs more than three times as efficiently as conventional neural-network-based methods, which is a step towards the practical use of neural networks in rendering.
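The exact NASG formula is given in the paper rather than on this page, so the sketch below does not implement NASG. Instead, it uses the simpler isotropic von Mises-Fisher (vMF) distribution, a common choice in parametric path guiding. The sketch illustrates what a normalised spherical lobe gives a path guider:

- Its density integrates to one in closed form.
- It can be sampled exactly by inverting its CDF.

As a result, the pdf of a guided direction is always available for the Monte Carlo weight. NASG, as its name indicates, is an anisotropic distribution that is also normalised. The mixture parameters in `LOBES` are hypothetical stand-ins for what a small network might predict at one shading point. All function names are illustrative, and κ must be positive.

```python
import math
import random

# Stand-in sketch: isotropic vMF lobes, NOT the NASG distribution from the paper.

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def orthonormal_basis(n):
    """Return unit vectors t, b such that (t, b, n) is an orthonormal frame."""
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = normalize(cross(helper, n))
    return t, cross(n, t)

def vmf_pdf(v, mu, kappa):
    """Normalised von Mises-Fisher density on the unit sphere (integrates to 1)."""
    norm = kappa / (2.0 * math.pi * (1.0 - math.exp(-2.0 * kappa)))
    return norm * math.exp(kappa * (dot(mu, v) - 1.0))  # exp(k(w - 1)) avoids overflow

def vmf_sample(mu, kappa, rng):
    """Sample a direction from vMF(mu, kappa) by inverting the CDF of w = mu . v."""
    xi = 1.0 - rng.random()                       # in (0, 1], so log() never sees 0
    w = 1.0 + math.log(xi + (1.0 - xi) * math.exp(-2.0 * kappa)) / kappa
    w = max(-1.0, min(1.0, w))
    phi = 2.0 * math.pi * rng.random()            # azimuth is uniform around mu
    s = math.sqrt(1.0 - w * w)
    t, b = orthonormal_basis(mu)
    return tuple(w * m + s * (math.cos(phi) * tc + math.sin(phi) * bc)
                 for m, tc, bc in zip(mu, t, b))

def mixture_pdf(v, lobes):
    """Density of a weighted mixture of vMF lobes (weights must sum to 1)."""
    return sum(weight * vmf_pdf(v, mu, kappa) for weight, mu, kappa in lobes)

def mixture_sample(lobes, rng):
    """Pick a lobe with probability equal to its weight, then sample from it."""
    u = rng.random()
    for weight, mu, kappa in lobes:
        if u < weight:
            return vmf_sample(mu, kappa, rng)
        u -= weight
    _, mu, kappa = lobes[-1]                      # guard against rounding in the weights
    return vmf_sample(mu, kappa, rng)

# Hypothetical parameters a guiding network might predict at one shading point:
# (weight, mean direction, concentration kappa).
LOBES = [
    (0.7, normalize((0.0, 0.3, 1.0)), 40.0),
    (0.3, normalize((1.0, 0.0, 0.2)), 5.0),
]

if __name__ == "__main__":
    rng = random.Random(7)
    d = mixture_sample(LOBES, rng)
    p = mixture_pdf(d, LOBES)
    # A guided path tracer would divide the sampled direction's contribution by p.
    print(d, p)
```

The sampler picks a lobe by its weight, then evaluates the full mixture pdf rather than the chosen lobe's pdf alone. Using the full mixture pdf keeps the f/p estimator unbiased when lobes overlap.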

The results were published in ACM Transactions on Graphics, the journal of the ACM (Association for Computing Machinery). They were also presented orally at SIGGRAPH 2024 (Denver, USA, August 2024), the premier conference on computer graphics and interactive techniques. An image from our paper was used as the cover of Volume 43, Issue 3, the issue in which this work was published.

The work was also selected for an invited talk in the SIGGRAPH Invited Talk Session at Visual Computing 2024, Japan's most prestigious academic symposium on computer graphics and related fields.

Theme

Generation of Realistic Cyber/Virtual Space by Physically-based Rendering with Normalised Anisotropic Spherical Gaussians

Main Research Results and External Presentations

Japanese-language results are listed in Japanese.